DIY A Multiview Camera System:

Panoptic Studio Teardown

Tutorial, CVPR 2017

8:30 AM - 12:30 PM, July 21, Friday

Hawaii Convention Center, Room 303AB

Tutorial Video

Note: tutorial slides are also available below.

Invited Speakers

Takeo Kanade

Carnegie Mellon University

Thabo Beeler

Disney Research Zurich

Derek Bradley

Disney Research Zurich

Christian Theobalt

MPI Informatik

Organizers

Hanbyul Joo

Carnegie Mellon University

Tomas Simon

Carnegie Mellon University

Hyun Soo Park

University of Minnesota, Twin Cities

Shohei Nobuhara

Kyoto University

Yaser Sheikh

Carnegie Mellon University

Description

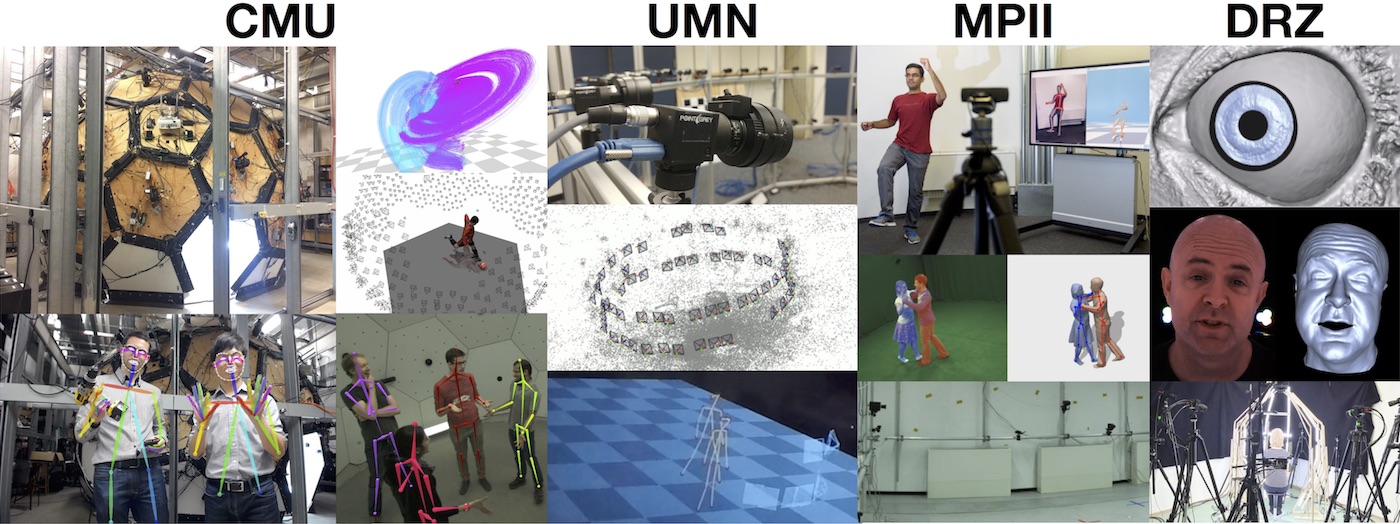

The tutorial will cover a wide spectrum of multicamera systems, from micro to macro. We will first cover technical hardware issues that are common across systems, such as synchronization, calibration, and data communications. We will then discuss hardware design factors, such as camera placement, resolution, and framerate, which are strongly related to visual representations. We will also discuss algorithmic challenges associated with the design factors, e.g., matching, tracking, and reconstruction. Three case studies will be conducted: DRZ (submillimeter scale, hair and eye), MPII (centimeter to meter scale, single person), and Panoptic Studio (meter scale, more than 5 people). In particular, we will use the Panoptic Studio as a primary example, and we will demonstrate a modular system at the venue. Three distinguished speakers from CMU, MPII, and DRZ are invited. We will also release 3D data and the associated computational challenges in dynamic scene matching, 3D reconstruction, and micro pose/activity recognition.

Program

- How to select cameras?

- How many cameras do we need?

- Where to place cameras?

- How to synchronize cameras?

- How do the cameras communicate?

- How to design a storage system?

- How to design and run capture software?

- How to calibrate a multiview camera system?

- How to build a multiple-Kinect system (sync and calibration)?

- 3D Volume Reconstruction

- 3D Trajectory Reconstruction

- 3D Keypoint Reconstruction

- Multiview Bootstrapping

- Why do we need a multiview system in the deep learning era?

- The Panoptic Studio Dataset

- How to build a high-end multicamera system with consumer-grade equipment (no specialized hardware) and open-source software?

- How to achieve real-time computation with a cost-effective single-board computer (e.g., Raspberry Pi, Odroid, NVIDIA TX2)?

- How much does it cost?

- How can I contribute to the multicamera system community in terms of hardware design and software?

| Time | Topic | Presenter(s) | Slides |
|---|---|---|---|
| 8:30-8:45 | Opening | Yaser Sheikh, Hyun Soo Park | [PDF] |
| 8:45-9:05 | Invited Talk: Many-Camera Systems: How They Started | Takeo Kanade | [PDF] |
| 9:05-9:30 | Design Principles for Multiview System, Hardware Design, Structure, Synchronization, and Networking | Shohei Nobuhara | [PDF] |
| 9:30-10:00 | Invited Talk: New Methods for Marker-less Motion and Performance Capture and the Multi-Camera Studio Behind<br>Abstract: In this talk, I will review some of our work on marker-less motion and performance capture with multi-view video. While we originally looked into methods working in more or less controlled indoor settings, we recently began to look into approaches for motion and performance capture that work in less constrained and more general outdoor scenes, with a much lower number of cameras, even a single one. As an example, I will show our recent VNect approach (SIGGRAPH 2017), the first method for real-time 3D human motion capture with a single webcam. All our work depends on a versatile multi-view video studio for capturing data. I will show what this studio looks like and what guided our studio design decisions. | Christian Theobalt | |
| 10:00-10:30 | Data/storage, Capture System, Calibration, Multiple Kinect System | Hanbyul Joo, Tomas Simon | [PDF] |
| 10:30-11:00 | Coffee Break | | |
| 11:00-11:30 | Invited Talk: Multi-view Capture for High Resolution Digital Humans<br>Abstract: Creating realistic digital humans is becoming increasingly important in the visual effects industry. Capturing the likeness of an actor using multi-view reconstruction is now a standard procedure. To help avoid the infamous uncanny valley for the digital character, capturing the shape and motion at the highest possible quality and resolution is a necessity. In this talk we will briefly outline a number of capture setups and corresponding reconstruction algorithms for capturing actors in high resolution. We will touch upon the particulars of different setups, including cameras, lighting, synchronization, calibration, portability, and comfort for the actor. We will primarily focus on facial capture, but also dive a little into reconstructing hair and eyes. As part of this talk we will describe our Medusa Facial Performance Capture System, currently in use by Industrial Light & Magic and already demonstrated on over a dozen feature films. | Thabo Beeler, Derek Bradley | |
| 11:30-12:00 | 3D Reconstruction Methods, Multiview Bootstrapping, and Panoptic Studio Dataset | Hanbyul Joo, Tomas Simon | [PDF] |
| 12:00-12:30 | DIY A Multiview System and Demo | Hyun Soo Park | [PDF] |

References

- OpenPose Library

- Hand Keypoint Detection in Single Images using Multiview Bootstrapping

- Panoptic Studio: A Massively Multiview System for Social Motion Capture

- MAP Visibility Estimation for Large-Scale Dynamic 3D Reconstruction